This technical article explains how to manually create the configuration needed to send commands from your local environment to an AWS EKS cluster.

With AWS EKS, your local kubectl CLI needs to be able to talk to your EKS cluster. Most guides assume that you install the AWS CLI. So what happens if you don't want to install the AWS CLI? In that case, the configuration needed to talk to the EKS cluster must be created manually. Let's check what that means.

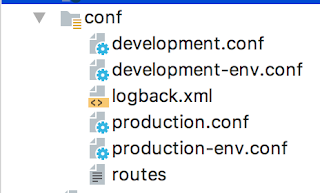

Before that, you need to know some technical concepts about k8s and AWS. I assume that you feel comfortable with, or at least have basic knowledge of, the following topics:

- AWS IAM: remember that you shouldn't use your AWS account root user.

- Kubectl CLI: the command-line interface for running commands against Kubernetes clusters; it must be installed in your local environment.

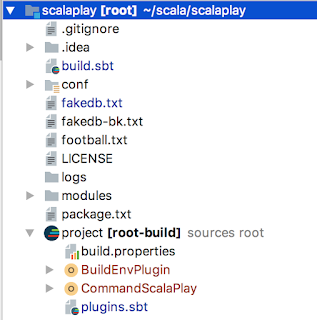

Our complete configuration is composed of 2 files in our local environment:

- ~/.kube/config: the config file, usually called the kubeconfig file, that is used to configure access to clusters. Like every definition document in k8s, it has fields such as apiVersion and kind. The first things it needs to describe are the location of the cluster and the credentials to access it.

- ~/.aws/credentials: the credentials with which I create my cluster, authenticated with my IAM account.

You can follow an installation guide for aws-iam-authenticator or try the following steps:

The lines below are written for OS X. If you are working on Linux, change the shell startup file in line 3 from .bash_profile to .bashrc. Note that the download URL in line 1 points to the darwin build, so on Linux you will need the Linux build instead.

```shell
curl -o aws-iam-authenticator https://amazon-eks.s3-us-west-2.amazonaws.com/1.13.7/2019-06-11/bin/darwin/amd64/aws-iam-authenticator
chmod +x ./aws-iam-authenticator
mkdir -p $HOME/bin && cp ./aws-iam-authenticator $HOME/bin/aws-iam-authenticator && echo 'export PATH=$HOME/bin:$PATH' >> ~/.bash_profile
```
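The OS X versus Linux difference in these steps can be captured in a small helper. This is only a sketch: the function name platform_for is hypothetical, and it assumes that the Linux build lives under a linux directory in the same layout as the darwin URL used in this article.

```shell
# Hypothetical helper: map the output of `uname -s` to the binary
# directory name and the shell startup file used in the install steps.
platform_for() {
  case "$1" in
    Darwin) echo "darwin .bash_profile" ;;
    Linux)  echo "linux .bashrc" ;;
    *)      echo "unsupported system: $1" >&2; return 1 ;;
  esac
}

# Print the choice for the machine running this script.
if choice="$(platform_for "$(uname -s)")"; then
  echo "binary directory and startup file: ${choice}"
fi
```

The function takes the system name as an argument instead of calling uname itself, so the mapping can be checked on any machine.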

Test the aws-iam-authenticator installation with: aws-iam-authenticator help

Now let's look at the location and name of our kubeconfig file and its content.
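If the help command is not found, the usual cause is that the shell has not yet picked up the new PATH entry (open a new terminal or source the startup file). A minimal sketch for checking that both tools used in this article are reachable; the function name require_tool is hypothetical.

```shell
# Hypothetical helper: report whether a command is reachable through PATH.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found"
  else
    echo "$1: not found in PATH"
    return 1
  fi
}

# kubectl and aws-iam-authenticator are the two tools this article relies on.
for tool in kubectl aws-iam-authenticator; do
  require_tool "$tool" || true
done
```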

~/.kube/config

```yaml
apiVersion: v1
clusters:
- cluster:
    server: <endpoint-url>
    certificate-authority-data: <base64-encoded-ca-cert>
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: aws
  name: aws
current-context: aws
kind: Config
preferences: {}
users:
- name: aws
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1alpha1
      command: aws-iam-authenticator
      args:
        - token
        - -i
        - my_cluster_name
        # - -r
        # - arn:aws:iam::835499627031:role/eksServiceRole
      env:
        - name: AWS_PROFILE
          value: my_profile_name
```

yaml definition 1.0

It is worth having a look at several lines of yaml definition 1.0 (the kubeconfig file above).

In line 20, the aws-iam-authenticator command, together with the arguments that follow it (token -i my_cluster_name), authenticates against the cluster using AWS IAM and gets a token for Kubernetes. To do that, it uses our credentials file with our access key and secret, which we explain below.

Below are the location and name of our credentials file and its content.

~/.aws/credentials

```ini
[my_user_in_IAM]
aws_access_key_id=generated_aws_access_key
aws_secret_access_key=generated_aws_secret_access_key
```

config definition 1.0

config definition 1.0 (the credentials file above) shows the structure of the credentials file; you can find more in the IAM documentation on managing access keys. The IAM console picture in this article shows an easy way to create the key and secret in your AWS IAM account. Use the same key, secret and IAM user that you used to create your EKS cluster. The profile name in square brackets must match the AWS_PROFILE value in the kubeconfig file.
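Because the AWS_PROFILE value in the kubeconfig selects a section of the credentials file by name, a mismatch between the two is an easy mistake to make. The sketch below checks for the section header; the function name has_profile is hypothetical and my_profile_name is the placeholder used in the kubeconfig of this article.

```shell
# Hypothetical helper: succeed if the credentials file ($1) contains
# a section header line for the given profile name ($2).
has_profile() {
  grep -q -x -F "[$2]" "$1"
}

creds="${HOME}/.aws/credentials"
profile="my_profile_name"   # placeholder: the AWS_PROFILE value from the kubeconfig
if [ -f "$creds" ] && has_profile "$creds" "$profile"; then
  echo "profile ${profile} found"
else
  echo "profile ${profile} not found in ${creds}"
fi
```

The -F and -x options make grep treat the square brackets literally and match the whole line, so a profile called my_profile does not match my_profile_name.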

Going back to yaml definition 1.0 (the kubeconfig file), we need to fill in two values, both of which appear in the picture of the EKS cluster page in this article. The server endpoint (line 4), <endpoint-url>, is the value shown as API server endpoint. The certificate-authority-data (line 5), <base64-encoded-ca-cert>, is the value shown as Certificate authority.
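The certificate authority value is a base64-encoded PEM certificate, so a quick sanity check after copying it is to decode it and look at the first line. This is a sketch only: the function name looks_like_ca_cert is hypothetical, the sample below is a fake certificate body, and it assumes a base64 command that accepts --decode.

```shell
# Hypothetical helper: succeed if the argument decodes from base64
# to text whose first line is a PEM certificate header.
looks_like_ca_cert() {
  first_line="$(printf '%s' "$1" | base64 --decode 2>/dev/null | head -n 1)"
  [ "$first_line" = "-----BEGIN CERTIFICATE-----" ]
}

# Self-contained demonstration with a fake certificate body (not a real one).
sample="$(printf '%s\n' '-----BEGIN CERTIFICATE-----' 'FAKE' '-----END CERTIFICATE-----' | base64 | tr -d '\n')"
if looks_like_ca_cert "$sample"; then
  echo "value decodes to a PEM certificate"
fi
```

This only catches copy and paste mistakes such as a truncated value; it does not verify that the certificate belongs to your cluster.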

This is just the configuration process. You will need to modify the kubeconfig file (~/.kube/config) every time you create a new cluster; this can be done with a command or by editing the kubeconfig file manually. I think you should do this process manually at least a few times, because it will help you understand it well.
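Once the manual process is familiar, one way to avoid retyping the file for each new cluster is a small script that fills in the four values that change. This is a sketch under assumptions: the function name write_kubeconfig is hypothetical, the endpoint and CA values in the demonstration are placeholders, and the output goes to a scratch file so an existing ~/.kube/config is not overwritten.

```shell
# Hypothetical helper: write a kubeconfig with the same structure as the one
# in this article. Arguments: endpoint, CA data, cluster name, profile, output file.
write_kubeconfig() {
  cat > "$5" <<EOF
apiVersion: v1
clusters:
- cluster:
    server: $1
    certificate-authority-data: $2
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: aws
  name: aws
current-context: aws
kind: Config
preferences: {}
users:
- name: aws
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1alpha1
      command: aws-iam-authenticator
      args:
        - token
        - -i
        - $3
      env:
        - name: AWS_PROFILE
          value: $4
EOF
}

# Demonstration with placeholder values, written to a scratch file.
out="$(mktemp)"
write_kubeconfig "https://example.invalid" "PLACEHOLDER_CA" "my_cluster_name" "my_profile_name" "$out"
echo "kubeconfig written to ${out}"
```

To try a generated file without touching the default one, point kubectl at it through the KUBECONFIG environment variable, for example by running kubectl get svc with KUBECONFIG set to the path printed above.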

So you can decide. From my point of view, when I am working in a Linux environment I run eksctl to create the cluster, but when I am working on OS X I prefer to create the cluster and worker nodes through the AWS console. It is just an opinion. In that case (OS X) I don't install the AWS CLI: ~/.kube/config, ~/.aws/credentials and the aws-iam-authenticator installation are enough.